The Geometry of Concepts: Sparse Autoencoder Feature Structure

Mike's Daily Deep Learning Paper Review - 03.03.25

This article explores how sparse autoencoders (SAEs) represent and structure concepts in LLMs. The researchers analyze this structure at three hierarchical scales: atomic, cortical, and galactic. The study makes several comparisons between the embedding space of language models and the structure of the brain, though it is clear that LLMs do not think exactly like humans.

Methodology:

As a refresher, SAEs are a tool for interpretability research on LLMs. An SAE is trained to reconstruct the activations of a specific layer in a model while using only a small subset of its learned features for any given input. This sparsity constraint forces the SAE to represent each activation vector as a linear combination of a small number of features, ideally with each feature encoding a specific, interpretable concept. In other words, the SAE learns a dictionary of feature vectors (embeddings) in which each feature is selectively activated by specific semantic patterns.
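To make the reconstruction-plus-sparsity idea concrete, here is a minimal NumPy sketch of an SAE forward pass and loss. It assumes a common ReLU encoder with an L1 penalty; the SAEs analyzed in the paper may use a different activation or penalty. The names (`sae_forward`, `sae_loss`, `l1_coeff`) and all sizes are made up for illustration, and the weights are random rather than trained, so the features here are not yet sparse or interpretable.

```python
import numpy as np

def sae_forward(x, W_enc, b_enc, W_dec, b_dec):
    """Encode activations x into non-negative feature activations f, then reconstruct x."""
    f = np.maximum(0.0, (x - b_dec) @ W_enc + b_enc)  # ReLU keeps feature activations >= 0
    x_hat = f @ W_dec + b_dec                         # linear combination of dictionary rows
    return f, x_hat

def sae_loss(x, f, x_hat, l1_coeff=1e-3):
    """Reconstruction error plus an L1 penalty that pushes most activations toward zero."""
    recon = np.mean(np.sum((x - x_hat) ** 2, axis=-1))
    sparsity = np.mean(np.sum(np.abs(f), axis=-1))
    return recon + l1_coeff * sparsity

# Hypothetical sizes: 16-d model activations, a dictionary of 64 features.
rng = np.random.default_rng(0)
d_model, n_features, batch = 16, 64, 8
W_enc = rng.normal(scale=0.1, size=(d_model, n_features))
b_enc = np.zeros(n_features)
W_dec = rng.normal(scale=0.1, size=(n_features, d_model))  # each row is one feature vector
b_dec = np.zeros(d_model)

x = rng.normal(size=(batch, d_model))  # stand-in for real layer activations
f, x_hat = sae_forward(x, W_enc, b_enc, W_dec, b_dec)
loss = sae_loss(x, f, x_hat)
```

The rows of `W_dec` play the role of the feature dictionary; a point cloud of such vectors is the kind of object whose geometry is studied in the rest of the review.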

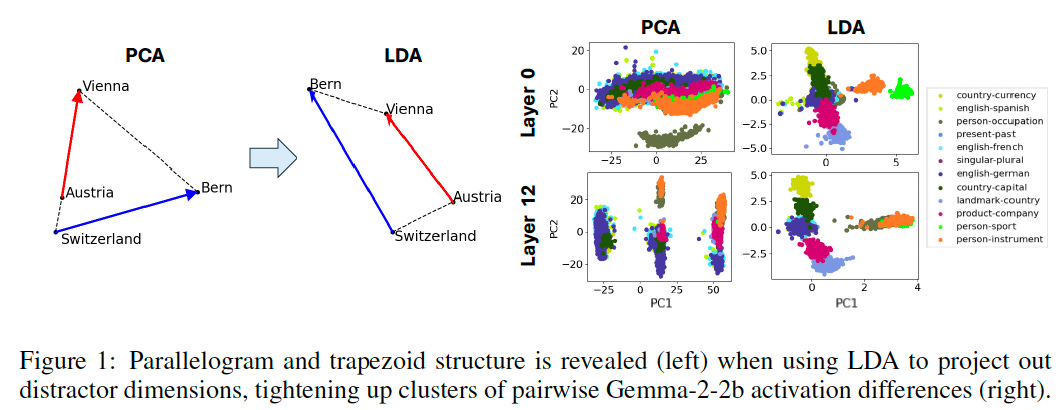

The researchers use SAEs to extract semantic features from the representations of concepts in LLMs, and then analyze the geometric structure of the resulting feature vectors at three scales. To uncover this structure, they use LDA (Linear Discriminant Analysis) to project out global "distractor" directions in the embedding space, such as word length, which could obscure the semantic relations between concepts. This step is especially important at the atomic level, where analogical relationships become clearer once the distractors are removed.
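The idea of removing a distractor direction can be sketched as follows. This is a simplified stand-in, not the paper's exact procedure: it estimates a single direction with a two-class Fisher discriminant on a synthetic nuisance label (think "short word" vs. "long word") and then projects that direction out. The function names and the synthetic data are assumptions made for illustration.

```python
import numpy as np

def fisher_direction(X, labels, ridge=1e-6):
    """Two-class Fisher discriminant direction: w proportional to Sw^{-1} (mu1 - mu0)."""
    X0, X1 = X[labels == 0], X[labels == 1]
    mu0, mu1 = X0.mean(axis=0), X1.mean(axis=0)
    # Within-class scatter, with a small ridge term for numerical stability.
    Sw = (X0 - mu0).T @ (X0 - mu0) + (X1 - mu1).T @ (X1 - mu1)
    Sw += ridge * np.eye(X.shape[1])
    w = np.linalg.solve(Sw, mu1 - mu0)
    return w / np.linalg.norm(w)

def project_out(X, direction):
    """Remove the component of every row of X along the given direction."""
    d = direction / np.linalg.norm(direction)
    return X - np.outer(X @ d, d)

# Synthetic data: the nuisance label shifts points along axis 0 only.
rng = np.random.default_rng(0)
n, dim = 200, 8
labels = rng.integers(0, 2, size=n)
X = rng.normal(size=(n, dim))
X[:, 0] += 3.0 * labels

w = fisher_direction(X, labels)  # should point mostly along axis 0
X_clean = project_out(X, w)      # rows now have no component along w
```

After the projection, whatever structure remains in `X_clean` can no longer be explained by the nuisance direction, which is the property the analogy analysis relies on.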

Atomic Level: "Crystals" and Geometric Patterns

At the smallest scale, the study identifies "crystals": geometric structures whose faces are parallelograms or trapezoids in the multi-dimensional feature space. These structures generalize well-known analogy relations such as man : woman :: king : queen, where the difference vector (woman - man) is approximately parallel to (queen - king). The researchers note that the quality of these geometric patterns improves significantly when global distractor directions, like word length, are removed using LDA.
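One simple way to score such a structure is the cosine similarity between the two difference vectors: it equals 1 for a perfect parallelogram or trapezoid and drops as the sides stop being parallel. The toy 4-d vectors below are invented for illustration (they are not SAE features), with the last axis acting as a word-length distractor; zeroing that axis stands in for the LDA step.

```python
import numpy as np

def cosine(u, v):
    """Cosine similarity between two vectors."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))

def analogy_quality(a, b, c, d):
    """How parallel are (b - a) and (d - c)? 1.0 means a perfect parallelogram or trapezoid."""
    return cosine(b - a, d - c)

# Toy vectors; axes are [gender, royalty, shared, distractor (word length)].
man   = np.array([0.0, 0.0, 1.0, 3.0])
woman = np.array([1.0, 0.0, 1.0, 5.0])
king  = np.array([0.0, 1.0, 1.0, 4.0])
queen = np.array([1.0, 1.0, 1.0, 5.0])

# With the distractor axis present, the two difference vectors are not parallel.
raw_quality = analogy_quality(man, woman, king, queen)

# Zero out the distractor axis (a stand-in for projecting it out with LDA).
keep = np.array([1.0, 1.0, 1.0, 0.0])
clean_quality = analogy_quality(man * keep, woman * keep, king * keep, queen * keep)
```

In this toy setup `clean_quality` is higher than `raw_quality`, mirroring the improvement the authors report once distractor directions are removed.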

Cortical Level: Spatial Modularity and "Lobes"

At a medium scale, the study reveals spatial modularity within the feature space of the SAE. Features related to specific domains, such as mathematics and coding, group together to form separate "lobes," similar to the functional regions seen in fMRI scans of the human brain. The researchers use several measures to quantify the spatial locality of these lobes and find that features that tend to fire together are also located closer to each other than would be expected under a random feature geometry. These findings suggest that the SAE organizes conceptual features in a way that reflects functional specialization.
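The "fire together" half of this analysis can be illustrated with a co-occurrence affinity between features. The sketch below uses the phi coefficient (Pearson correlation between binary firing indicators) as one possible affinity; the paper's pipeline also involves clustering and a comparison against the geometry of the feature vectors, which is not reproduced here. The data is synthetic, and the two-topic setup and all parameter values are assumptions.

```python
import numpy as np

def phi_affinity(fired):
    """fired: (n_blocks, n_features) boolean array of whether each feature fired in each block.
    Returns the feature-by-feature phi coefficient (Pearson correlation of binary columns)."""
    return np.corrcoef(fired.astype(float), rowvar=False)

# Synthetic setup: each text block has one of two topics (say, code or math),
# and each feature belongs to one of two groups that prefers one topic.
rng = np.random.default_rng(0)
n_blocks, n_per_group = 500, 5
topic = rng.integers(0, 2, size=n_blocks)
group = np.repeat([0, 1], n_per_group)  # feature index -> group id

# A feature fires with probability 0.6 on its own topic and 0.05 otherwise.
p_fire = np.where(topic[:, None] == group[None, :], 0.6, 0.05)
fired = rng.random((n_blocks, 2 * n_per_group)) < p_fire

A = phi_affinity(fired)
same = group[:, None] == group[None, :]
off_diag = ~np.eye(len(group), dtype=bool)
within = A[same & off_diag].mean()  # average affinity inside a group
across = A[~same].mean()            # average affinity between groups
```

Features from the same synthetic group end up with a clearly higher average affinity than features from different groups; the paper's question is whether groups found this way are also spatially close in the SAE's feature space.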

Galactic Level: Large-Scale Structures and Eigenvalue Distribution

At the largest scale, the study finds that the feature point cloud is anisotropic (its spread differs from one direction to another): the eigenvalues of its covariance matrix follow a power law, and the slope of that power law is steepest in the middle layers of the network. A steeper slope means that the variance is concentrated in fewer principal directions, so the large-scale shape of the representation is not uniform across layers. The authors also analyze how the clustering entropy of the point cloud changes across layers of the model, providing insights into the hierarchical structure of concept representations within the model.
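A rough way to examine this kind of structure is to compute the covariance eigenvalues of a point cloud and fit a line to them in log-log space. The sketch below does this on synthetic clouds with a known spectrum; the function names, sizes, and the simple least-squares fit are assumptions for illustration and not the authors' exact estimator.

```python
import numpy as np

def covariance_spectrum(points):
    """Eigenvalues of the sample covariance matrix, sorted from largest to smallest."""
    centered = points - points.mean(axis=0)
    cov = centered.T @ centered / (len(points) - 1)
    return np.linalg.eigvalsh(cov)[::-1]  # eigvalsh returns ascending order

def power_law_slope(eigvals):
    """Slope of log(eigenvalue) against log(rank); more negative means a steeper decay."""
    ranks = np.arange(1, len(eigvals) + 1)
    slope, _intercept = np.polyfit(np.log(ranks), np.log(eigvals), deg=1)
    return slope

rng = np.random.default_rng(0)
n_points, dim = 5000, 32

# Isotropic cloud: every direction has the same variance, so the spectrum is nearly flat.
iso = rng.normal(size=(n_points, dim))

# Anisotropic cloud: axis i has standard deviation i^(-1/2), so eigenvalue i scales as 1/i.
scales = np.arange(1, dim + 1) ** -0.5
aniso = rng.normal(size=(n_points, dim)) * scales

slope_iso = power_law_slope(covariance_spectrum(iso))
slope_aniso = power_law_slope(covariance_spectrum(aniso))
```

Repeating such a fit on the feature vectors of SAEs trained at different layers would give one slope per layer, which is the kind of quantity that can be compared across the network.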

https://arxiv.org/abs/2410.19750