This survey paper reviews the rapid evolution of Neuro-Symbolic AI (NSAI) over the last half-decade. Drawing from a methodically filtered corpus of 158 peer-reviewed works, the authors provide a taxonomically grounded, thematically structured map of where the research community has concentrated its efforts and where it has conspicuously not.

Fully 63% of the literature reviewed pertains to learning and inference, underscoring the field’s present commitment to blending statistical learning with logic-based constraints. Because many papers span several domains, the domain shares reported throughout overlap and together sum to more than 100%. Techniques such as Logical Neural Networks, symbolic priors in few-shot learning, and loss functions infused with semantic penalties exemplify attempts to ground neural learning in symbolic structure. These approaches not only improve sample efficiency and generalization but also begin to bridge the conceptual gap between inductive pattern extraction and deductive reasoning.
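To make "loss functions infused with semantic penalties" concrete, here is a minimal sketch of one known formulation, the semantic loss: the negative log-probability that a network's independent Bernoulli outputs satisfy a symbolic constraint. The "exactly one label is on" constraint, the `total_loss` helper, and its weighting are illustrative assumptions, not the method of any specific paper in the survey. Brute-force enumeration is used for clarity; it only scales to a handful of outputs.

```python
from __future__ import annotations

import math
from itertools import product
from typing import Callable, Sequence


def semantic_loss(probs: Sequence[float],
                  constraint: Callable[[tuple[int, ...]], bool]) -> float:
    """Negative log-probability that independent Bernoulli outputs satisfy `constraint`.

    Enumerates all 2**n assignments, so it only suits small n; practical
    systems compile the constraint into a tractable circuit instead.
    Assumes the constraint has nonzero probability (math.log(0) raises ValueError).
    """
    satisfied_mass = 0.0
    for assignment in product((0, 1), repeat=len(probs)):
        if constraint(assignment):
            # Probability of this exact assignment under independent outputs.
            weight = 1.0
            for p, bit in zip(probs, assignment):
                weight *= p if bit else 1.0 - p
            satisfied_mass += weight
    return -math.log(satisfied_mass)


def exactly_one(assignment: tuple[int, ...]) -> bool:
    """Symbolic constraint: exactly one output unit is on (a one-hot label)."""
    return sum(assignment) == 1


# Hypothetical training objective: task loss plus a weighted symbolic penalty.
def total_loss(task_loss: float, probs: Sequence[float], weight: float = 0.5) -> float:
    return task_loss + weight * semantic_loss(probs, exactly_one)
```

A confident one-hot prediction such as `[0.9, 0.05, 0.05]` satisfies the constraint with probability 0.82175 and is penalized only about 0.20, while a uniform `[0.5, 0.5, 0.5]` prediction (probability 0.375) is penalized about 0.98, so the gradient pushes the network toward outputs consistent with the symbolic rule.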

Knowledge representation emerges as another strong pillar, accounting for 44% of the literature. The best work in this area transcends simple graph embeddings, aiming instead to encode dynamic, structured, and commonsense knowledge into systems that can adapt over time. Systems like NeuroQL point to an interest in building models that are not just knowledgeable but epistemologically structured, meaning models that can “do more with less” while maintaining semantic alignment.

Closely interlinked with knowledge representation is logic and reasoning (35%), where models such as DeepStochLog and Logical Credal Networks attempt to recast logic programming in neural and probabilistic terms. These contributions enable hybrid reasoning under uncertainty, a core capacity if Neuro-Symbolic systems are to function in complex, partially observable environments. The coupling of probabilistic logic with symbolic control architectures is a recurring and fruitful pattern.
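The core idea of reasoning over uncertainty with logic can be illustrated with possible-world (distribution) semantics in the style of ProbLog: independent probabilistic facts define a distribution over worlds, deterministic rules derive consequences in each world, and a query's probability is the total weight of worlds in which it is derivable. This is a simplified sketch, not DeepStochLog's grammar-based semantics or the probability bounds used by Logical Credal Networks; the burglary/earthquake program is a hypothetical toy example.

```python
from itertools import product

# Hypothetical toy program: independent probabilistic facts with their probabilities.
PROB_FACTS = {"burglary": 0.1, "earthquake": 0.2}

# Deterministic rules as (head, body): the head holds if every body atom holds.
RULES = [("alarm", ("burglary",)), ("alarm", ("earthquake",))]


def consequences(true_facts, rules):
    """Forward-chain the rules to a fixpoint and return every derivable atom."""
    derived = set(true_facts)
    changed = True
    while changed:
        changed = False
        for head, body in rules:
            if head not in derived and all(atom in derived for atom in body):
                derived.add(head)
                changed = True
    return derived


def query_probability(query, prob_facts, rules):
    """Sum the weights of all possible worlds in which `query` is derivable."""
    names = list(prob_facts)
    total = 0.0
    for bits in product((False, True), repeat=len(names)):
        # Weight of this world: product over facts of p (present) or 1 - p (absent).
        weight = 1.0
        for name, present in zip(names, bits):
            weight *= prob_facts[name] if present else 1.0 - prob_facts[name]
        world = {name for name, present in zip(names, bits) if present}
        if query in consequences(world, rules):
            total += weight
    return total
```

Here `query_probability("alarm", PROB_FACTS, RULES)` evaluates to 0.28, which matches 1 - (0.9 × 0.8): the alarm fails only in the world where neither cause occurs. Exhaustive enumeration is exponential; real systems use knowledge compilation or approximate inference instead.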

Despite growing deployment of AI systems in critical applications, only 28% of the reviewed works tackle explainability and trustworthiness. This imbalance is not merely statistical but structural. Most advances focus on post hoc summarization, semantic-level revision, or improved document-level inference. Notable contributions include Braid, a reasoner that blends probabilistic and symbolic rules to reduce brittleness, and FactPEGASUS, which aligns LLM-generated summaries with factuality through hybrid training paradigms.

However, the broader vision, namely building systems whose reasoning is not just intelligible after the fact but inherently transparent, remains elusive. There is little systematic exploration of how explainability can be built into learning objectives, model architecture, or inference procedures. The result is a disconnect: systems grow increasingly powerful, yet their inner workings remain opaque to their creators.
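One way to read "inherently transparent" is reasoning that records its own justifications as it runs, so the explanation is a by-product of inference rather than a post hoc reconstruction. The minimal sketch below, a forward-chaining reasoner over propositional atoms, illustrates that idea; the `Rule` class, the rule names, and the Socrates example are hypothetical and are not drawn from any system in the survey.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str
    head: str
    body: tuple[str, ...]


def chain_with_justifications(facts, rules):
    """Forward-chain to a fixpoint, recording which rule and premises derived each atom."""
    why: dict[str, tuple[str, tuple[str, ...]]] = {f: ("given", ()) for f in facts}
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.head not in why and all(b in why for b in rule.body):
                # The justification is stored at derivation time, not reconstructed later.
                why[rule.head] = (rule.name, rule.body)
                changed = True
    return why


def explain(atom, why, depth=0):
    """Render the derivation of `atom` as an indented proof tree (one string per line)."""
    rule_name, premises = why[atom]
    lines = ["  " * depth + f"{atom}  [{rule_name}]"]
    for premise in premises:
        lines.extend(explain(premise, why, depth + 1))
    return lines
```

With the given fact `human(socrates)` and rules `r1: mortal(socrates) <- human(socrates)` and `r2: has_finite_lifespan(socrates) <- mortal(socrates)`, calling `explain("has_finite_lifespan(socrates)", why)` yields a three-level proof tree ending in the given fact. Because each atom is justified only once, by premises derived earlier, the proof tree is always finite.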

Perhaps the most significant contribution of the paper is its elevation of Meta-Cognition from a footnote in prior taxonomies to a defined and urgent subdomain. Only 5% of the papers reviewed touch on this theme, yet self-monitoring, adaptive control, and introspective reasoning are arguably the missing pieces in current Neuro-Symbolic architectures.

The paper adopts a rigorous definition of Meta-Cognition as the capacity to regulate and reflect on internal cognitive processes. In practical terms, this encompasses symbolic controllers layered atop RL agents, integrations of LLMs with cognitive architectures (e.g., ACT-R, Soar), and theoretical frameworks aligned with the Common Model of Cognition. These works, though few, gesture toward a future in which AI systems are not only reactive but strategically self-aware and capable of managing attention, choosing among inference strategies, and self-correcting in unfamiliar contexts.
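The monitor-and-control loop behind "choosing among inference strategies" and "self-correcting" can be sketched in a few lines. In this hypothetical design, a metacognitive layer accepts a fast, intuition-like answer only when its self-reported confidence clears a threshold and a cheap symbolic check passes; otherwise it escalates to a slower deliberate procedure and logs the decision. All names (`MetaController`, `fast_recall`, `slow_compute`, `last_digit_check`) and the 0.8 threshold are illustrative assumptions, not components of ACT-R, Soar, or any surveyed system.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class MetaController:
    """Hypothetical metacognitive layer that chooses an inference strategy per query."""
    fast: Callable[[str], Tuple[str, float]]  # cheap guess plus self-reported confidence
    slow: Callable[[str], str]                # deliberate (e.g. symbolic) procedure
    verify: Callable[[str, str], bool]        # cheap symbolic sanity check on a guess
    threshold: float = 0.8
    trace: List[Tuple[str, str, float]] = field(default_factory=list)

    def answer(self, query: str) -> str:
        guess, confidence = self.fast(query)
        # Monitor: accept the fast answer only if it is confident and passes verification.
        if confidence >= self.threshold and self.verify(query, guess):
            self.trace.append((query, "fast", confidence))
            return guess
        # Control: otherwise escalate to the slower strategy (self-correction).
        self.trace.append((query, "slow", confidence))
        return self.slow(query)


def fast_recall(query: str) -> Tuple[str, float]:
    """Toy 'intuition': a memorized table that is sometimes wrong."""
    memory = {"2+2": ("4", 0.95), "17+25": ("41", 0.9)}
    return memory.get(query, ("", 0.0))


def slow_compute(query: str) -> str:
    """Toy deliberate strategy: actually add the terms."""
    return str(sum(int(term) for term in query.split("+")))


def last_digit_check(query: str, answer: str) -> bool:
    """Cheap check: the last digit of a sum equals the sum of last digits mod 10."""
    if not answer.isdigit():
        return False
    expected = sum(int(term[-1]) for term in query.split("+")) % 10
    return int(answer[-1]) == expected
```

With `MetaController(fast_recall, slow_compute, last_digit_check)`, the query `"2+2"` is answered by the fast path, while the confidently wrong memorized `"41"` for `"17+25"` fails the last-digit check and is corrected to `"42"` by the slow path. The `trace` list is the controller's record of its own strategy choices, a minimal form of introspection.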

Importantly, the authors push beyond Kahneman’s dual-system metaphor, noting that human cognition is neither binary nor layered in isolation but a densely interconnected “system of systems.” To build Neuro-Symbolic agents that mirror human adaptability, Meta-Cognition must not be an afterthought; it must be the glue binding perception, reasoning, learning, and communication.

One of the most revealing observations is the rarity of work that integrates all four core domains: learning and inference, logic and reasoning, knowledge representation, and explainability. The sole flagship example is AlphaGeometry, a system that generates millions of synthetic geometry theorems and proofs through symbolic deduction, pretrains a neural language model on them, and then uses that model to propose auxiliary constructions that guide a symbolic deduction engine. The system represents a blueprint: domain-specific expertise, procedural abstraction, symbolic tractability, and model guidance through learned heuristics. However, it remains an outlier.
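The division of labor in AlphaGeometry can be sketched as a propose-and-deduce loop: a symbolic engine derives everything it can from the current facts, and only when it stalls does a learned model suggest an auxiliary construction to add. The sketch below is a heavily simplified assumption-laden illustration: the symbolic engine is plain forward chaining over string atoms (not AlphaGeometry's actual deduction engine), and `toy_proposer` is a hypothetical stand-in for the trained language model.

```python
from typing import Callable, FrozenSet, Iterable, List, Optional, Set, Tuple

# A rule fires when all premises hold: (premises, conclusion).
Rule = Tuple[FrozenSet[str], str]
# Stand-in for the neural model: maps (known facts, goal) to one construction or None.
Proposer = Callable[[Set[str], str], Optional[str]]


def deductive_closure(facts: Set[str], rules: Iterable[Rule]) -> Set[str]:
    """Symbolic step: forward-chain until no rule adds a new fact."""
    rule_list = list(rules)
    closed = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rule_list:
            if conclusion not in closed and premises <= closed:
                closed.add(conclusion)
                changed = True
    return closed


def solve(premises: Set[str], goal: str, rules: Iterable[Rule],
          propose: Proposer, max_constructions: int = 3) -> Optional[List[str]]:
    """Alternate exhaustive deduction with learned suggestions; return the constructions used."""
    rule_list = list(rules)
    facts = deductive_closure(premises, rule_list)
    added: List[str] = []
    while goal not in facts:
        if len(added) >= max_constructions:
            return None  # search budget exhausted
        suggestion = propose(facts, goal)
        if suggestion is None or suggestion in facts:
            return None  # proposer has nothing new to offer
        added.append(suggestion)
        facts = deductive_closure(facts | {suggestion}, rule_list)
    return added


def toy_proposer(facts: Set[str], goal: str) -> Optional[str]:
    """Hypothetical stand-in for a trained language model."""
    return "aux_point" if "aux_point" not in facts else None
```

For example, with rules `{A, aux_point} -> C` and `{C} -> D`, deduction from `{A}` alone cannot reach `D`; after the proposer adds `aux_point`, the closure contains `D` and `solve` returns `["aux_point"]`. Every step after the suggestion is a symbolic derivation, which is what keeps the final proof checkable.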

The limited overlap between explainability and other domains is especially concerning. Integration remains low, suggesting that while individual capabilities are advancing, their coordination into coherent, trustworthy systems is still in its infancy. If Neuro-Symbolic AI is to realize its promise as a human-aligned intelligence paradigm, this fragmentation must be addressed.

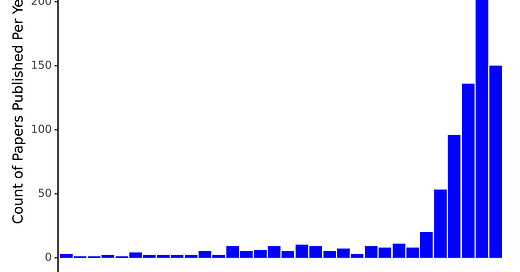

Methodologically, the paper’s filtering approach is a strength in itself. Of 1,428 initial papers, only 158 (about 11%) made the final cut, selected for relevance, peer review status, and the availability of open-source code. This stringent filtering underscores a deep concern with reproducibility and practical utility, ensuring that the findings reflect not just speculative ideas but tested, shareable systems.

By including only papers with publicly available code (with an exception for Meta-Cognition due to scarcity), the authors offer a baseline for transparency and reuse. This also implicitly critiques the still-too-common practice of publishing complex Neuro-Symbolic proposals without operational instantiations.
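The selection criteria described above can be expressed as a simple predicate, and the same sketch shows why per-domain shares can exceed 100% in total when a paper is tagged with several domains. The `Paper` record, its boolean fields, and the domain labels are hypothetical; the survey's actual screening was performed by its authors and is not published as code here.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Paper:
    title: str
    relevant: bool
    peer_reviewed: bool
    has_code: bool
    domains: frozenset[str]  # a paper may belong to several domains


def passes_filter(paper: Paper) -> bool:
    """Relevance and peer review are mandatory; open-source code is required
    except for Meta-Cognition papers, which are exempt because they are scarce."""
    if not (paper.relevant and paper.peer_reviewed):
        return False
    return paper.has_code or "meta-cognition" in paper.domains


def domain_shares(papers: list[Paper]) -> dict[str, int]:
    """Percentage of papers tagged with each domain; shares overlap across domains."""
    counts: dict[str, int] = {}
    for paper in papers:
        for domain in paper.domains:
            counts[domain] = counts.get(domain, 0) + 1
    return {domain: round(100 * c / len(papers)) for domain, c in counts.items()}
```

Applied to the survey's counts, keeping 158 of 1,428 candidates corresponds to a retention rate of roughly 11%. Because `domain_shares` counts a multi-domain paper once per domain, its percentages can sum to more than 100, as the survey's 63%, 44%, 35%, 28%, and 5% do.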

This review represents a major milestone in mapping the Neuro-Symbolic AI landscape. It identifies what is working: structured learning with symbolic priors, logic-based generalization, and hybrid representation frameworks. It also calls out what is missing: native explainability, introspective control, and integrated systems capable of autonomous adaptation.

Above all, it posits Meta-Cognition not as an aspirational ideal but as a foundational requirement. If Neuro-Symbolic AI aims to move beyond brittle heuristics and monolithic networks, it must grapple with the architecture of thought itself and not just its outputs. This is a field on the cusp. The research community has assembled the pieces. The question now is whether it can build the system.

https://arxiv.org/abs/2501.05435