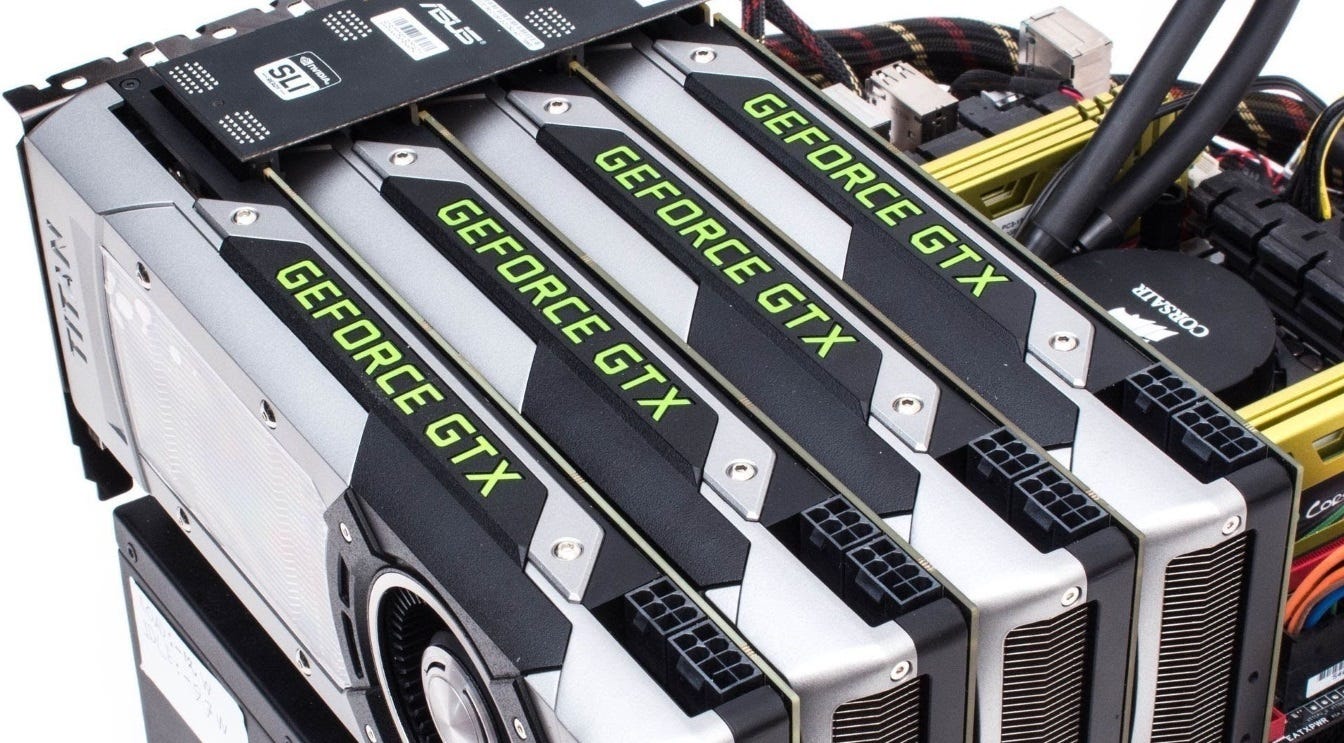

Inference Optimization Techniques for LLMs in Multi-GPU Setups

Accelerate your data generation with LLMs in multi-GPU regimes

Large Language Models (LLMs) offer groundbreaking capabilities, but their substantial size poses significant hurdles to efficient inference, especially when using batch processing across multiple GPUs.

This review explores various optimization techniques designed to accelerate LLM inference, specifically targeting batch inference within distributed GPU setups.

1. Quantization:

Concept: Quantization reduces the numerical precision of model weights and activations, moving from higher-precision formats such as FP32 or FP16 to lower-precision formats such as INT8, INT4, or even binary.

Techniques:

Post-Training Quantization (PTQ): This method quantizes a pre-trained model directly, without any retraining. It's straightforward to implement but may lead to some accuracy reduction.
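The sketch below makes PTQ concrete with symmetric, per-tensor INT8 quantization that uses a single "absmax" scale. The function names are hypothetical. Production toolkits typically add per-channel scales, activation calibration, and fast INT8 kernels on top of this basic idea.

```python
# Minimal sketch (hypothetical helpers): symmetric per-tensor INT8 PTQ, no retraining.
def quantize_int8(weights):
    """Map floats to int8 codes using one scale derived from the largest |weight|."""
    absmax = max(abs(w) for w in weights)
    scale = absmax / 127 if absmax > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize_int8(codes, scale):
    """Recover approximate float weights from the int8 codes."""
    return [c * scale for c in codes]


weights = [0.42, -1.27, 0.003, 0.89, -0.5]
codes, scale = quantize_int8(weights)
restored = dequantize_int8(codes, scale)
max_err = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, scale, max_err)
```

Every weight is reconstructed to within half a quantization step (`scale / 2`). A single large outlier inflates the scale, which costs precision for every other weight in the tensor.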

Mixed-Precision Quantization: This approach strategically uses different precision levels for various parts of the model, carefully balancing performance gains with acceptable accuracy.
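One simple way to assign precisions is a greedy per-layer rule. For each layer, try the lowest bit width first and keep it only if the relative reconstruction error stays under a tolerance; otherwise fall back to FP16. This weight-error-only policy and the layer names are illustrative assumptions. Practical methods usually measure sensitivity on calibration data or on output quality instead.

```python
# Hypothetical greedy mixed-precision planner based on weight reconstruction error.
def fake_quantize(weights, bits):
    """Symmetric quantize-then-dequantize at the given bit width."""
    qmax = 2 ** (bits - 1) - 1
    absmax = max(abs(w) for w in weights)
    scale = absmax / qmax if absmax > 0 else 1.0
    return [max(-qmax, min(qmax, round(w / scale))) * scale for w in weights]


def relative_error(orig, approx):
    """Relative L2 error ||orig - approx|| / ||orig||."""
    num = sum((a - b) ** 2 for a, b in zip(orig, approx))
    den = sum(a ** 2 for a in orig) or 1.0
    return (num / den) ** 0.5


def choose_precision(layers, tolerance, candidates=(4, 8)):
    """Pick the lowest bit width per layer that meets the tolerance, else keep FP16."""
    plan = {}
    for name, weights in layers.items():
        plan[name] = "fp16"
        for bits in candidates:  # lowest bit width first
            if relative_error(weights, fake_quantize(weights, bits)) <= tolerance:
                plan[name] = f"int{bits}"
                break
    return plan


layers = {
    "mlp.up": [0.1, -0.2, 0.15, 0.05, -0.12],
    "attn.out": [0.7, -0.7, 0.3, -0.1, 0.5],
}
print(choose_precision(layers, tolerance=0.05))  # {'mlp.up': 'int8', 'attn.out': 'int4'}
```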

Multi-GPU Considerations: Quantization is highly beneficial in multi-GPU environments. Lower-precision weights shrink the model's memory footprint and the memory bandwidth consumed per forward pass. This frees GPU memory for larger batch sizes and can reduce the volume of data exchanged between GPUs. Libraries such as TensorRT (including TensorRT-LLM) and ONNX Runtime provide optimized quantized kernels, and TensorRT-LLM combines them with tensor and pipeline parallelism for multi-GPU inference.

Challenges: A primary challenge is potential accuracy loss, particularly at extremely low precision levels. For PTQ, the selection and preparation of calibration data are critical to minimize this loss.

2. Pruning:

Concept: Pruning involves strategically removing redundant or less critical connections (weights) within the LLM.

Techniques:

Weight Pruning: This technique selectively sets individual weights to zero based on criteria like their magnitude or assessed importance.
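The sketch below shows unstructured magnitude pruning on a flat list of weights: the fraction `sparsity` of weights with the smallest absolute values is set to zero. The function name is hypothetical. In practice pruning is usually applied per layer or per row, and it is often followed by fine-tuning to recover accuracy.

```python
# Hypothetical helper: unstructured magnitude pruning.
def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    n_prune = int(len(weights) * sparsity)
    # indices ordered by |weight|, smallest first
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


w = [0.8, -0.05, 0.3, 0.01, -0.6, 0.2, -0.02, 0.9]
print(magnitude_prune(w, 0.5))  # [0.8, 0.0, 0.3, 0.0, -0.6, 0.0, 0.0, 0.9]
```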

Layer/Sub-network Pruning: More aggressive, this method removes entire layers or sub-networks, yielding a smaller, shallower model whose remaining weights are still dense.

Structured Pruning: This refines pruning further by removing weights in regular patterns, such as whole attention heads, neurons, or fixed N:M blocks, rather than scattered individual weights. The resulting sparsity is far more readily accelerated by hardware and existing kernels.
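A common hardware-friendly structured pattern is 2:4 sparsity, in which at most two of every four consecutive weights are non-zero. This is the pattern accelerated by the Sparse Tensor Cores in NVIDIA's Ampere and later GPUs. The hypothetical helper below enforces it on one row of weights by keeping the two largest-magnitude values in each group of four.

```python
# Hypothetical helper: enforce 2:4 structured sparsity on a single weight row.
def prune_2_of_4(row):
    """Keep the 2 largest-magnitude weights in every contiguous group of 4."""
    if len(row) % 4:
        raise ValueError("row length must be a multiple of 4")
    out = []
    for g in range(0, len(row), 4):
        group = row[g:g + 4]
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        out.extend(v if i in keep else 0.0 for i, v in enumerate(group))
    return out


row = [0.9, -0.1, 0.4, 0.05, -0.3, 0.7, 0.02, -0.8]
print(prune_2_of_4(row))  # [0.9, 0.0, 0.4, 0.0, 0.0, 0.7, 0.0, -0.8]
```

Because the pattern is fixed, hardware can store only the kept values plus small per-group indices. This is what lets the GPU skip the zeros, which scattered unstructured zeros do not allow.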

Multi-GPU Considerations: Pruning is valuable in multi-GPU setups because it reduces the overall model size and the computational workload, which can translate into higher inference throughput. NVIDIA GPUs from the Ampere and Hopper architectures include Sparse Tensor Cores that accelerate matrix multiplications with 2:4 structured sparsity (two non-zero values in every group of four). This further enhances the benefits of pruning when the weights follow that pattern.

Challenges: The main challenge lies in accurately identifying and removing weights that are truly unimportant without causing a significant drop in model accuracy. Careful selection of pruning criteria and strategies is essential. Furthermore, while sparse matrix operations can be accelerated, they may still be less efficient than dense operations if not properly optimized.

3. Knowledge Distillation:

Concept: Knowledge distillation involves training a smaller, more efficient "student" model to replicate the behavior and outputs of a much larger "teacher" model.

Techniques:

Soft Label Distillation: This uses the probability distributions produced by the teacher model as the training targets for the student, transferring richer information than just hard labels.
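Below is a minimal sketch of the soft-label objective, using the temperature-scaled KL divergence popularized by Hinton et al. (2015). The helper names and the temperature value are illustrative assumptions. In practice this term is usually combined with an ordinary cross-entropy loss on the hard labels.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2
    so gradient magnitudes stay comparable across temperatures."""
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)
    kl = sum(pi * (math.log(pi) - math.log(qi)) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


teacher = [4.0, 1.0, 0.5]
print(distillation_loss([4.0, 1.0, 0.5], teacher))  # 0.0: student matches teacher
print(distillation_loss([1.0, 3.0, 0.5], teacher))  # positive: distributions differ
```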

Feature-Based Distillation: This more advanced technique transfers intermediate representations from the teacher model to guide the student's learning, encouraging it to mimic the teacher's internal processing rather than only its final outputs.

Multi-GPU Considerations: Distillation is particularly useful when the goal is to serve smaller, faster models on fewer or resource-limited GPUs. A student small enough to fit on a single GPU avoids inter-GPU communication at inference time altogether. The large teacher model is needed only offline, for example to generate soft labels or to train the student, where a powerful multi-GPU environment can be used.

Challenges: Designing effective distillation strategies and student architectures is key. The inherent capacity limitations of the student model may prevent it from perfectly mirroring the teacher's full knowledge and performance.

4. Optimized Kernels and Libraries:

Concept: This optimization focuses on using highly efficient, hardware-optimized implementations of fundamental operations common in LLMs, such as matrix multiplication, attention, and normalization.

Techniques:

Custom CUDA Kernels: Developing specialized kernels tailored for specific LLM operations can yield significant performance improvements by directly leveraging GPU hardware.

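Libraries: Utilizing highly optimized libraries is crucial. cuBLAS and cuDNN provide pre-built, highly tuned routines for core operations such as matrix multiplication. TensorRT compiles a model into an optimized inference engine. The Triton language and compiler make it much easier to write custom GPU kernels in Python.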

Fused Kernels: Combining multiple operations into single, fused kernels reduces memory access overhead and kernel launch overhead, leading to faster execution.
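The difference between separate and fused kernels can be sketched in plain Python. Each list comprehension stands in for one GPU kernel launch. The unfused version writes an intermediate buffer and reads it back, while the fused version makes a single pass. This only illustrates the data flow (the bias-add plus GELU pair is an assumed example). The real speedup comes from avoiding global-memory round trips and extra launches on a GPU, for instance in a hand-written CUDA or Triton kernel.

```python
import math


def gelu(v):
    """GELU, tanh approximation."""
    return 0.5 * v * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (v + 0.044715 * v ** 3)))


def unfused(x, bias):
    tmp = [xi + bi for xi, bi in zip(x, bias)]  # "kernel 1": bias add, writes an intermediate buffer
    return [gelu(t) for t in tmp]               # "kernel 2": reads the buffer back, applies GELU


def fused(x, bias):
    # one "kernel": each element is read once, transformed, and written once
    return [gelu(xi + bi) for xi, bi in zip(x, bias)]


x = [0.5, -1.2, 2.0, 0.0]
bias = [0.1, 0.1, -0.3, 0.2]
print(fused(x, bias) == unfused(x, bias))  # True: same math, one fewer buffer
```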

Multi-GPU Considerations: Optimized kernels and libraries are essential for maximizing per-GPU performance in multi-GPU inference. TensorRT applies automatic layer and kernel fusion when it builds an engine, and TensorRT-LLM adds tensor and pipeline parallelism across GPUs. Compilers such as PyTorch's torch.compile generate fused Triton kernels automatically.

Challenges: Optimizing kernels requires deep expertise in GPU programming and architecture. Kernel optimization is also highly hardware-dependent, meaning kernels may need to be tuned for specific GPU models to achieve peak performance.

5. Architectural Optimizations:

Concept: This approach involves modifying the LLM's architecture itself to inherently reduce computational complexity and memory footprint.

Techniques:

Efficient Attention Mechanisms: Standard softmax attention has a cost that grows quadratically with sequence length. Replacing it with more efficient approximations, such as sparse attention or linear attention, can bring that cost down to near-linear, which matters most for long sequences.
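The sketch below shows how linear attention avoids the quadratic term. It uses kernelized attention with the feature map elu(x) + 1, in the style of Katharopoulos et al. (2020), which is one of several linear-attention variants. Because the feature map replaces softmax, this changes the model and is normally trained in rather than swapped into a pretrained softmax model. The code checks that reordering the products (summarizing all keys and values once) gives the same output as the explicit all-pairs form. Function names are hypothetical.

```python
import math


def phi(vec):
    """Positive feature map elu(x) + 1."""
    return [v + 1.0 if v > 0 else math.exp(v) for v in vec]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def kernel_attention_quadratic(Q, K, V):
    """Explicit form: every query is scored against every key, O(n^2) in sequence length."""
    fk = [phi(k) for k in K]
    out = []
    for q in Q:
        fq = phi(q)
        w = [dot(fq, kj) for kj in fk]  # n scores for this query
        out.append([sum(wj * vj[c] for wj, vj in zip(w, V)) / sum(w) for c in range(len(V[0]))])
    return out


def kernel_attention_linear(Q, K, V):
    """Same result in O(n): summarize keys/values once, reuse the summary for every query."""
    fk = [phi(k) for k in K]
    d, dv = len(fk[0]), len(V[0])
    S = [[sum(kj[a] * vj[c] for kj, vj in zip(fk, V)) for c in range(dv)] for a in range(d)]  # d x dv
    z = [sum(kj[a] for kj in fk) for a in range(d)]  # normalizer summary, length d
    out = []
    for q in Q:
        fq = phi(q)
        out.append([sum(fq[a] * S[a][c] for a in range(d)) / dot(fq, z) for c in range(dv)])
    return out


Q = [[0.5, -1.0], [1.5, 0.2], [-0.3, 0.8]]
K = [[1.0, 0.0], [-0.5, 0.5], [0.2, -1.2]]
V = [[1.0, 2.0], [0.0, -1.0], [3.0, 0.5]]
print(kernel_attention_quadratic(Q, K, V))
print(kernel_attention_linear(Q, K, V))  # matches up to floating-point rounding
```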

Reduced Model Depth and Width: Using smaller models with fewer layers and fewer hidden units per layer directly decreases the number of parameters and computations required for inference.

Layer Fusion: Combining multiple sequential layers into a single, more complex layer can reduce memory access and improve data locality during inference.
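The simplest instance of layer fusion is sketched below: two consecutive affine layers with no nonlinearity between them collapse into one, because W2(W1x + b1) + b2 = (W2W1)x + (W2b1 + b2). This is an assumed example. In a transformer block most adjacent layers are separated by activations, so this applies only to specific pairs (for example, folding a normalization layer's affine scale and shift into the following projection). The helper names are hypothetical.

```python
def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def matmul(A, B):
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]


def fold_linear_pair(W1, b1, W2, b2):
    """Fold y = W2 (W1 x + b1) + b2 into a single layer y = W x + b.
    Valid only when there is no nonlinearity between the two layers."""
    W = matmul(W2, W1)
    b = [u + v for u, v in zip(matvec(W2, b1), b2)]
    return W, b


W1 = [[1.0, 2.0], [0.5, -1.0], [0.0, 3.0]]  # maps 2 -> 3
b1 = [0.1, 0.2, 0.3]
W2 = [[1.0, 0.0, -1.0], [2.0, 1.0, 0.5]]    # maps 3 -> 2
b2 = [0.5, -0.5]
x = [0.7, -1.3]

hidden = [p + q for p, q in zip(matvec(W1, x), b1)]
two_layers = [u + v for u, v in zip(matvec(W2, hidden), b2)]
W, b = fold_linear_pair(W1, b1, W2, b2)
one_layer = [u + v for u, v in zip(matvec(W, x), b)]
print(two_layers, one_layer)  # equal up to floating-point rounding
```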

Multi-GPU Considerations: Architectural optimizations are broadly beneficial in multi-GPU settings. By reducing the overall model size and computational demands, they enable the use of larger batch sizes and faster overall inference speeds across multiple GPUs.

Challenges: The primary challenge is maintaining model accuracy while aggressively reducing architectural complexity. Architectural changes require careful design and extensive evaluation to ensure that performance gains are not offset by unacceptable accuracy losses.

6. Batching and Pipelining:

Concept: These techniques are fundamental to efficient multi-GPU inference. Batching processes multiple inference requests concurrently, while pipelining overlaps computation and communication to hide latency.

Techniques:

Static Batching: This simpler form groups incoming requests into fixed-size batches before processing.

Dynamic Batching: More sophisticated, this dynamically groups requests into batches based on factors like sequence length and request similarity to optimize resource utilization and minimize padding.
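The padding benefit of grouping requests by sequence length can be shown with a short sketch that compares first-come-first-served batches against length-sorted batches. It is a simplification. It assumes all requests are already queued, and it ignores the scheduling concerns a real dynamic batcher handles (timeouts, maximum queue delay, fairness). The helper names are hypothetical.

```python
# Hypothetical helpers: compare padding cost of FIFO vs length-aware batching.
def padding_tokens(batches):
    """Pad tokens needed when each batch is padded to its longest sequence."""
    return sum(max(b) * len(b) - sum(b) for b in batches)


def fifo_batches(lengths, batch_size):
    return [lengths[i:i + batch_size] for i in range(0, len(lengths), batch_size)]


def length_bucketed_batches(lengths, batch_size):
    """Group requests of similar length together to minimize padding."""
    ordered = sorted(lengths)
    return [ordered[i:i + batch_size] for i in range(0, len(ordered), batch_size)]


lengths = [12, 250, 15, 240, 10, 260, 14, 255]  # request lengths in tokens
print(padding_tokens(fifo_batches(lengths, 4)))             # 984
print(padding_tokens(length_bucketed_batches(lengths, 4)))  # 44
```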

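Tensor Parallelism: This splits individual weight matrices (for example, the attention and MLP projections) across multiple GPUs. Each GPU computes part of every layer, and the partial results are combined with collective communication such as all-gather or all-reduce. Because this communication happens in every layer, tensor parallelism works best across GPUs joined by fast interconnects such as NVLink.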
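The two basic ways to split a linear layer y = Wx across devices can be simulated on a single machine. Megatron-LM pairs them as its "column-parallel" and "row-parallel" linear layers. Sharding the output features needs a concatenation (all-gather) at the end, while sharding the input features needs a sum (all-reduce). The list-based "devices" and function names are illustrative assumptions, and dimensions are assumed to divide evenly by the device count.

```python
def matvec(W, x):
    """Dense y = W x, with W stored as rows (out_features x in_features)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def shard_outputs(W, x, n_devices):
    """Each device owns a slice of W's rows (output features); results are concatenated (all-gather)."""
    k = len(W) // n_devices
    parts = [matvec(W[d * k:(d + 1) * k], x) for d in range(n_devices)]
    return [v for part in parts for v in part]


def shard_inputs(W, x, n_devices):
    """Each device owns a slice of W's columns and of x (input features); partials are summed (all-reduce)."""
    k = len(x) // n_devices
    partials = []
    for d in range(n_devices):
        cols = slice(d * k, (d + 1) * k)
        partials.append(matvec([row[cols] for row in W], x[cols]))
    return [sum(vals) for vals in zip(*partials)]


W = [[1.0, 2.0, 0.5, -1.0],
     [0.0, 1.5, -2.0, 1.0],
     [3.0, -0.5, 1.0, 0.25],
     [-1.0, 0.0, 2.0, 0.5]]
x = [0.5, -1.0, 2.0, 1.5]
print(matvec(W, x))
print(shard_outputs(W, x, 2))
print(shard_inputs(W, x, 2))
```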

Pipeline Parallelism: This divides the LLM into sequential stages (groups of consecutive layers) and assigns each stage to a different GPU. Each batch is typically split into micro-batches that flow through the pipeline, so different GPUs work on different micro-batches at the same time.
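The sketch below shows the idle time inherent in pipelining. It lays out a forward-only (inference) schedule in which micro-batch j reaches stage s at time step s + j, assuming equal stage times and free communication. With p stages and m micro-batches the run takes m + p - 1 steps, and the idle ("bubble") fraction is (p - 1) / (m + p - 1). The function names are hypothetical.

```python
def pipeline_schedule(num_stages, num_microbatches):
    """Forward-only schedule: grid[t][s] is the micro-batch running on stage s at step t (None = idle)."""
    steps = num_stages + num_microbatches - 1
    grid = [[None] * num_stages for _ in range(steps)]
    for j in range(num_microbatches):
        for s in range(num_stages):
            grid[s + j][s] = j
    return grid


def bubble_fraction(num_stages, num_microbatches):
    # idle stage-slots / total stage-slots = (p - 1) / (m + p - 1)
    return (num_stages - 1) / (num_microbatches + num_stages - 1)


for t, row in enumerate(pipeline_schedule(4, 6)):
    print(t, ["-" if mb is None else mb for mb in row])
print(bubble_fraction(4, 6))  # 0.333...: a third of the stage-slots are idle
```

Raising the micro-batch count from 6 to 24 in this model drops the bubble to 3/27, about 11%. This is why pipelines favor many micro-batches, at the cost of less work per kernel.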

Sequence Parallelism: Especially relevant for long sequences, this technique distributes the processing of the sequence dimension across multiple GPUs, reducing the memory footprint per GPU.
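The part of sequence parallelism that needs no communication is easy to sketch. Token-wise operations such as LayerNorm are split along the sequence dimension, so each device stores activations for only its chunk of tokens. Attention, which mixes information across tokens, also requires exchanging keys and values between devices; that step is not shown. The function names are hypothetical.

```python
import math


def layer_norm(token, eps=1e-5):
    """Normalize one token's hidden vector (no learned scale/shift, for brevity)."""
    mean = sum(token) / len(token)
    var = sum((x - mean) ** 2 for x in token) / len(token)
    return [(x - mean) / math.sqrt(var + eps) for x in token]


def split_sequence(tokens, n_devices):
    """Give each device a contiguous chunk of the sequence (ceiling division)."""
    chunk = -(-len(tokens) // n_devices)
    return [tokens[i:i + chunk] for i in range(0, len(tokens), chunk)]


def sequence_parallel_layer_norm(tokens, n_devices):
    shards = split_sequence(tokens, n_devices)
    # each device normalizes only its own tokens, so per-device activation memory shrinks
    outputs = [[layer_norm(t) for t in shard] for shard in shards]
    return [t for shard in outputs for t in shard]  # gather along the sequence dimension


tokens = [[1.0, 2.0, 3.0], [0.5, -0.5, 0.0], [2.0, 2.0, 5.0], [-1.0, 0.0, 1.0], [4.0, 1.0, 1.0]]
print(sequence_parallel_layer_norm(tokens, 2) == [layer_norm(t) for t in tokens])  # True
```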

Multi-GPU Considerations: Batching and pipelining are critical for achieving high GPU utilization and maximizing throughput in multi-GPU environments. Tensor and pipeline parallelism are essential for scaling LLM inference to large clusters of GPUs. Sequence parallelism becomes increasingly important when dealing with very long input sequences.

Challenges: Implementing effective batching and pipelining in multi-GPU systems involves difficulties such as load balancing across GPUs, managing communication overhead between GPUs, and handling synchronization issues. Dynamic batching adds complexity in scheduling and resource management. Pipeline parallelism leaves some GPUs idle while the pipeline fills and drains (the "pipeline bubble"), and it introduces additional stalls if stages are not well balanced.

7. Memory Optimization:

Concept: Memory optimization focuses on reducing the memory footprint of LLMs and minimizing bandwidth requirements during inference.

Techniques:

Offloading: When GPU memory is limited, less frequently used model weights and activations can be offloaded to CPU memory, or to NVMe storage when CPU memory is also insufficient. This frees up valuable GPU memory at the cost of slower data transfers.
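The bookkeeping behind simple weight offloading can be simulated in a few lines. A fixed number of layers stay resident on the "GPU", and the rest are copied in from host memory every time they are used. The class, the scalar "weights", and the pin-the-first-layers policy are illustrative assumptions. Libraries such as Hugging Face Accelerate and DeepSpeed implement real offloading and typically overlap these copies with computation.

```python
class LayerOffloader:
    """Hypothetical helper: the first `gpu_capacity` layers stay on the "GPU";
    the rest are copied from host ("CPU") memory each time they are needed."""

    def __init__(self, host_layers, gpu_capacity):
        self.host = host_layers  # every layer's weights live in host memory
        names = list(host_layers)
        self.resident = {n: host_layers[n] for n in names[:gpu_capacity]}
        self.transfers = 0  # count of simulated host-to-device copies

    def fetch(self, name):
        if name in self.resident:
            return self.resident[name]
        self.transfers += 1  # on real hardware: a host-to-device copy
        return self.host[name]  # used for this step only, then dropped


def forward(offloader, x, layer_order):
    for name in layer_order:
        weight = offloader.fetch(name)
        x = [v * weight for v in x]  # stand-in for the real layer computation
    return x


layers = {f"layer{i}": 1.0 + 0.1 * i for i in range(6)}  # toy scalar "weights"
offloader = LayerOffloader(layers, gpu_capacity=4)
out = forward(offloader, [1.0, 2.0], list(layers))
print(offloader.transfers)  # 2: layer4 and layer5 were streamed in
```

Every forward pass repeats the same two transfers, which is the communication overhead the challenges below refer to.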

Memory Pooling: Allocating a fixed-size pool of memory and reusing it for different operations throughout the inference process reduces memory allocation overhead and fragmentation.

Multi-GPU Considerations: Memory optimization is paramount for fitting large LLMs and large inference batches within the often-constrained memory of GPUs. Techniques like offloading are essential for models whose weights and activations exceed the combined memory of the available GPUs, even after the model has been split across them with tensor or pipeline parallelism.
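A back-of-the-envelope estimate shows why batches, and not just weights, drive memory pressure. The sketch assumes standard multi-head attention with one key and one value tensor cached per layer. It ignores activations, workspace, and fragmentation. The 7B-class configuration (32 layers, 32 heads of dimension 128, FP16) is an illustrative assumption rather than a specific model.

```python
GIB = 2 ** 30


def weight_bytes(num_params, bytes_per_param):
    return num_params * bytes_per_param


def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, batch_size, bytes_per_value):
    # factor 2: one key tensor and one value tensor per layer
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size * bytes_per_value


# Hypothetical 7B-class configuration stored in FP16 (2 bytes per value)
w = weight_bytes(7e9, 2)
kv = kv_cache_bytes(num_layers=32, num_kv_heads=32, head_dim=128,
                    seq_len=4096, batch_size=16, bytes_per_value=2)
print(f"weights {w / GIB:.1f} GiB, KV cache {kv / GIB:.1f} GiB")  # weights 13.0 GiB, KV cache 32.0 GiB
```

At a batch of 16 sequences of 4,096 tokens, the KV cache is already larger than the FP16 weights. Batch size and context length, as much as model size, therefore determine how many GPUs or how much offloading a deployment needs.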

Challenges: Offloading introduces communication overhead as data needs to be moved between GPU and CPU/NVMe. Effective memory management and careful profiling are crucial to balance memory savings with potential performance impacts.

8. Compilation and Runtime Optimization:

Concept: This optimization layer focuses on optimizing the model's execution graph and the runtime environment for the specific hardware it will run on.

Techniques:

Graph Optimization: This includes techniques like fusing operators (combining multiple operations into one), eliminating redundant operations, and optimizing data layout in memory to improve access patterns.
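As a small illustration of eliminating redundant operations, the sketch below runs common-subexpression elimination over a toy graph. The graph is a list of (output, op, inputs) tuples in topological order; this representation is an assumption made for brevity. The pass is only valid for deterministic operators: it must never merge, say, two dropout or sampling nodes.

```python
def eliminate_common_subexpressions(nodes):
    """nodes: list of (output_name, op, input_names) in topological order."""
    seen = {}   # (op, inputs) -> output name of the first node computing it
    alias = {}  # removed output name -> surviving equivalent
    optimized = []
    for out, op, inputs in nodes:
        inputs = tuple(alias.get(i, i) for i in inputs)  # rewire to surviving nodes
        key = (op, inputs)
        if key in seen:
            alias[out] = seen[key]  # duplicate computation: drop the node
        else:
            seen[key] = out
            optimized.append((out, op, inputs))
    return optimized, alias


graph = [
    ("a", "matmul", ("x", "w")),
    ("b", "matmul", ("x", "w")),  # same as "a"
    ("c", "gelu", ("a",)),
    ("d", "gelu", ("b",)),        # becomes gelu(a), same as "c"
    ("e", "add", ("c", "d")),
]
optimized, alias = eliminate_common_subexpressions(graph)
print(optimized)  # three nodes remain: a, c, e = add(c, c)
print(alias)      # {'b': 'a', 'd': 'c'}
```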

Just-in-Time (JIT) Compilation: JIT compilation compiles the model specifically at runtime, tailoring the compiled code to the exact input shapes and the target hardware architecture.

Runtime Tuning: This involves automatically searching for and applying optimal hyperparameters for the runtime environment, such as batch size, number of threads used for execution, and memory allocation strategies.
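The core of runtime tuning is a search loop over candidate settings. The sketch below picks the batch size with the highest throughput among those that fit in memory. The `measure_latency_s` and `fits_in_memory` callables are hypothetical stand-ins. In real use the first would time actual inference runs (after warm-up) and the second would come from profiling. The toy cost model here is an assumption, not measured data.

```python
def tune_batch_size(candidates, measure_latency_s, fits_in_memory):
    """Return (best_batch_size, throughput) among candidates that fit in memory, or None."""
    best = None
    for bs in candidates:
        if not fits_in_memory(bs):
            continue
        throughput = bs / measure_latency_s(bs)  # requests per second
        if best is None or throughput > best[1]:
            best = (bs, throughput)
    return best


def toy_latency(bs):
    # hypothetical cost model: fixed overhead plus a per-request cost
    return 0.05 + 0.01 * bs


def toy_fits(bs):
    # hypothetical memory cap
    return bs <= 32


print(tune_batch_size([1, 2, 4, 8, 16, 32, 64], toy_latency, toy_fits))  # best is batch size 32
```

If latency instead grew superlinearly with batch size, the same loop would settle on an intermediate value. That trade-off is why the search is run empirically rather than by simply maximizing batch size.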

Multi-GPU Considerations: Compilation and runtime optimization are highly beneficial in multi-GPU systems. They let the system exploit hardware-specific features of the GPUs and optimize the overall execution graph for parallel processing. Tools such as torch.compile (and the older TorchScript), ONNX Runtime, and TensorRT provide graph optimization and compilation capabilities for efficient GPU inference.

Challenges: Compilation itself can introduce overhead, especially for very large models. Selecting the right optimization options and tuning parameters can be complex and often requires experimentation and profiling to find the best configuration for a given model and hardware setup.

Conclusion:

Optimizing LLM inference in multi-GPU environments is a multifaceted challenge requiring a combination of techniques. From model compression and architectural adjustments to highly optimized kernels and runtime systems, the optimal strategy depends on a complex interplay of factors.

These include the LLM's size, the desired accuracy level, the available hardware resources, and the strictness of latency requirements. Ongoing research and development are crucial to create even more efficient and scalable inference methods. This includes exploring hardware-software co-design principles and developing novel algorithms specifically tailored for distributed computing environments.