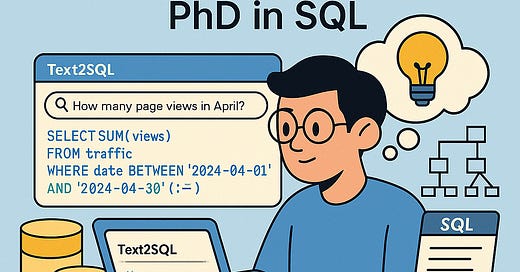

How Taboola Built a Precise Query Engine That Doesn’t Require a PhD in SQL

By Ben Shaharizad, Senior Software Engineer, Data Platform at Taboola (translated by me with the generous help of some LLM)

This post is a translation of a great post by Ben Shaharizad of Taboola, originally written in Hebrew: https://www.geektime.co.il/query-engine-use-case/

When SQL Isn't Enough

In large organizations, even a seemingly simple business question can quickly spiral into a complex data maze. A question like “How many impressions did my campaigns get in June?” demands not only knowing where the data is, but also understanding which table, database, field, and syntax will yield the answer without inadvertently triggering a terabyte-sized query.

Data exists—but it’s fragmented across dozens of databases, thousands of tables, hundreds of dashboards, and partial documentation. Names can be misleading, context isn’t always clear, and before long, it can feel like you need a PhD just to know where to start. Take “account-id”—does it refer to an advertiser, a content site, or perhaps an internal user?

If you've ever worked at a company with more than a handful of systems, you likely know the frustration: too many similar-sounding tables, confusing column names, and no clear path to the truth.

A simple answer can require half a day of detective work—and who has that time?

Enter Sage

We built Sage, our internal Text2SQL engine, to solve this. Here’s how we tackled the challenge, what worked (and didn’t), and the lessons for any organization wanting to make its data accessible, clear, and useful.

Why LLMs Alone Aren’t Enough

LLMs (Large Language Models) are brilliant at SQL—but they don’t understand your data or your business language. They won’t know that "Premium Traffic" means campaigns delivered via the Apple Stocks app. Or that publisher_id actually maps to advertiser_id.

Initially, we were hopeful. But reality quickly grounded us. Here are the three main approaches we tried before landing on what actually worked.

1. Send Everything to the LLM—What Could Go Wrong?

We naïvely started by feeding the model all our DDLs—hoping it would sift out what mattered. Spoiler: it didn’t. The prompt became enormous. The model drowned in irrelevant data, resulting in clumsy or incorrect queries. Users lost trust. Too much noise, zero context.

2. RAG on Tables and Columns

Next, we tried Retrieval-Augmented Generation (RAG)—retrieving only relevant tables or columns for a given question. Sounds smart, right?

In theory, yes. In practice, no.

Table names were often misleading: campaign_imp was outdated and irrelevant, while campaign_snapshot was actually the key table but was missed because its name gave no hint of that. Even relevant tables lacked the semantic information needed to form valid JOINs or to interpret enum values like user_segment = 3. Without business context, even technically correct queries missed the point.

RAG works best when the retrieved data is rich with meaning (e.g., wiki paragraphs)—not when all you have are table names.

3. Maybe Queries Themselves Hold the Key?

If the schema doesn’t tell the full story, maybe real queries can? That led us to index real human-authored SQL queries alongside their natural language questions (from docs, wikis, analyst cheat sheets, etc.).

We built a new RAG system, this time not on table names but on real Q&A pairs. If a user’s question matched a previously answered one, we reused its SQL and added the relevant table DDLs and sample data to guide the model.
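As a rough illustration of the idea, here is a minimal sketch of question-to-SQL retrieval. It is not Sage's implementation: the bag-of-words cosine score is a stand-in for whatever embedding search a real system would use, and the stored pairs, the revenue_daily table, and the column names are invented for the example.

```python
import math
import re
from collections import Counter

# Hypothetical index of natural-language questions and human-authored SQL.
QA_PAIRS = [
    ("How many impressions did my campaigns get in June?",
     "SELECT SUM(impressions) FROM campaign_snapshot WHERE month = 6"),
    ("What was total revenue per publisher last week?",
     "SELECT publisher_id, SUM(revenue) FROM revenue_daily GROUP BY publisher_id"),
]


def tokens(text):
    """Lowercase bag of words; a crude stand-in for an embedding."""
    return Counter(re.findall(r"[a-z0-9_]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def best_match(question):
    """Return (score, stored_question, sql) for the closest stored pair."""
    q = tokens(question)
    scored = [(cosine(q, tokens(known)), known, sql) for known, sql in QA_PAIRS]
    return max(scored)


exact = best_match("How many impressions did my campaigns get in June?")
paraphrase = best_match("Show June ad views for my campaigns")
```

With this toy scorer, the identical question scores 1.0, while the paraphrase shares only three tokens with the stored question and scores well below 0.5, even though both ask for the same thing.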

When questions were nearly identical, it worked beautifully. But even slight phrasing differences produced wildly different outcomes. Worse, LLMs started hallucinating enum values (e.g., user_type = "gold", a value that doesn’t exist in our system).

Tiny wording changes were enough to change which stored question matched. It became clear that we needed to rely less on string similarity and more on semantic understanding.

The Breakthrough: Workspaces

The real solution came when we ditched generalized RAG and embraced context-specific Workspaces. Each workspace focused on a specific business domain (e.g., traffic, revenue), with:

A handpicked set of relevant tables

Rich, human-readable JSON descriptions of table meanings, granularity, use cases, relationships, enum values, and example queries

Glossaries defining business lingo like "high quality traffic", "Motion Ads", or "Taboola Pixel events"

We stopped throwing every table at the problem and instead curated knowledge agents for each domain—each with tailored data, relationships, and terminology.
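To make this concrete, here is a hedged sketch of what a workspace definition and its prompt assembly might look like. The field names, the table description, and the user_segment labels are placeholders invented for illustration; only the "Premium Traffic" definition and the campaign_snapshot table name come from the text above.

```python
import json

# Hypothetical workspace definition. Field names, descriptions, and enum
# labels are placeholders, not real Taboola metadata.
TRAFFIC_WORKSPACE = {
    "name": "traffic",
    "tables": [
        {
            "name": "campaign_snapshot",
            "description": "Delivery metrics per campaign.",
            "granularity": "one row per campaign per day",
            "enums": {"user_segment": {"1": "placeholder_a", "3": "placeholder_c"}},
            "example_queries": [
                "SELECT SUM(impressions) FROM campaign_snapshot WHERE campaign_id = 42"
            ],
        }
    ],
    "glossary": {
        "Premium Traffic": "Campaigns delivered via the Apple Stocks app.",
    },
}


def build_context(workspace, question):
    """Assemble the prompt context for one question within one workspace."""
    # Include only the glossary terms the question actually mentions.
    terms = {
        term: meaning
        for term, meaning in workspace["glossary"].items()
        if term.lower() in question.lower()
    }
    sections = [
        f"Workspace: {workspace['name']}",
        "Tables:\n" + json.dumps(workspace["tables"], indent=2),
    ]
    if terms:
        sections.append("Glossary:\n" + json.dumps(terms, indent=2))
    return "\n\n".join(sections)


context = build_context(TRAFFIC_WORKSPACE, "How much premium traffic did we get in June?")
```

Because the workspace holds only a handful of curated tables, the whole description fits in the prompt, and the model sees the allowed enum values instead of guessing them.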

The Supervisor: Smart Routing Behind the Scenes

Users shouldn't need to pick the right agent. That’s what the Supervisor (aka PaPa Agent) is for. It identifies the context of each user query and routes it to the appropriate Workspace agent. If the topic shifts mid-conversation, it switches agents seamlessly.

To the user, it feels like talking to a single smart, coherent system.
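The routing behavior can be sketched roughly as follows. This is an assumption-laden toy: the keyword sets are invented, and a simple keyword overlap stands in for the LLM-based classification a real supervisor would presumably use.

```python
import re

# Hypothetical keywords per workspace; a real router would likely ask an LLM.
WORKSPACE_KEYWORDS = {
    "traffic": {"impressions", "clicks", "traffic"},
    "revenue": {"revenue", "spend", "cpc"},
}


class Supervisor:
    """Routes each question to a workspace and remembers the current one."""

    def __init__(self, keywords):
        self.keywords = keywords
        self.current = None  # no workspace chosen until a question matches

    def route(self, question):
        words = set(re.findall(r"[a-z]+", question.lower()))
        scores = {name: len(words & kws) for name, kws in self.keywords.items()}
        best = max(scores, key=scores.get)
        # Switch only when the question clearly points somewhere;
        # otherwise treat it as a follow-up in the current workspace.
        if scores[best] > 0:
            self.current = best
        return self.current


supervisor = Supervisor(WORKSPACE_KEYWORDS)
first = supervisor.route("How many impressions did we get in June?")
follow_up = supervisor.route("And for May?")
shifted = supervisor.route("What revenue did that generate?")
```

Here the follow-up question stays in the traffic workspace because it carries no routing signal of its own, and the third question switches to revenue.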

Safety Nets and Continuous Learning

Smart queries can still crash systems—so we added:

Pre-execution EXPLAIN checks: catch syntax or performance issues before running

Error recovery: retry failed queries after parsing the error and adjusting

Security layers: restrict sensitive table access, flag anomalies

Query Recipes: best practices for performance and accuracy

All of this is monitored and evaluated with Langfuse Pro, which enables prompt optimization and regression testing.
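The first two safety nets can be sketched together. The example below uses SQLite from Python's standard library purely as a stand-in database; the table contents, the generate_sql callback, and the retry limit are all assumptions, and fake_generate_sql plays the role of the model.

```python
import sqlite3


def explain_check(conn, sql):
    """Return None if the statement compiles, otherwise the error message."""
    try:
        # EXPLAIN QUERY PLAN prepares the statement without running it.
        conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
        return None
    except sqlite3.Error as exc:
        return str(exc)


def run_with_recovery(conn, question, generate_sql, max_attempts=3):
    """Generate SQL, check it first, and feed any error back into the retry."""
    error = None
    for _ in range(max_attempts):
        sql = generate_sql(question, error)
        error = explain_check(conn, sql)
        if error is None:
            return conn.execute(sql).fetchall()
    raise RuntimeError(f"gave up after {max_attempts} attempts: {error}")


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE campaign_snapshot (campaign_id INTEGER, impressions INTEGER)")
conn.executemany("INSERT INTO campaign_snapshot VALUES (?, ?)", [(1, 10), (2, 20)])


def fake_generate_sql(question, previous_error):
    # Stand-in for the model: the first attempt uses a wrong column name,
    # the retry (which sees the error message) uses the right one.
    if previous_error is None:
        return "SELECT SUM(imps) FROM campaign_snapshot"
    return "SELECT SUM(impressions) FROM campaign_snapshot"


rows = run_with_recovery(conn, "Total impressions?", fake_generate_sql)
```

The bad first attempt never reaches execution: the pre-check reports the unknown column, and that message becomes input for the second attempt. On a production warehouse the same check could also inspect the plan for full scans before deciding to run.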

Did It Actually Work?

Yes—but only after rigorous testing. We:

Generated paraphrased versions of common questions

Verified whether different phrasings led to similar results

Checked whether critical columns or filters were present

Used actual database results to compare query outputs
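A minimal sketch of the last three checks, again using SQLite as a stand-in database. The table, the reference query, and the candidate queries (imagined as model output for two paraphrases of the same question) are assumptions made up for the example.

```python
import sqlite3


def result_set(conn, sql):
    """Run a query and return its rows in a stable order for comparison."""
    return sorted(conn.execute(sql).fetchall(), key=repr)


def missing_terms(sql, required_terms):
    """List the required columns or filters that the SQL never mentions."""
    lowered = sql.lower()
    return [term for term in required_terms if term.lower() not in lowered]


def evaluate(conn, reference_sql, candidate_sqls, required_terms):
    """Compare each candidate query against a trusted reference query."""
    expected = result_set(conn, reference_sql)
    return [
        {
            "sql": sql,
            "missing_terms": missing_terms(sql, required_terms),
            "matches_reference": result_set(conn, sql) == expected,
        }
        for sql in candidate_sqls
    ]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE campaign_snapshot (campaign_id INTEGER, month INTEGER, impressions INTEGER)")
conn.executemany(
    "INSERT INTO campaign_snapshot VALUES (?, ?, ?)",
    [(1, 6, 10), (2, 6, 20), (1, 5, 7)],
)

report = evaluate(
    conn,
    reference_sql="SELECT SUM(impressions) FROM campaign_snapshot WHERE month = 6",
    candidate_sqls=[
        "SELECT SUM(impressions) AS total FROM campaign_snapshot WHERE month = 6",
        "SELECT SUM(impressions) FROM campaign_snapshot",  # forgot the June filter
    ],
    required_terms=["impressions", "month"],
)
```

The second candidate is syntactically valid and runs fine, yet it fails both checks: the month filter is missing and the total differs from the reference. Comparing actual results catches that kind of error where a syntax check alone would not.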

The system continues to improve with each query. It’s still not perfect—but it learns and evolves constantly.

Final Thoughts

Our journey didn’t start with a fancy AI model. It started with a user need: to ask business questions without needing a PhD in SQL. We learned that LLMs need more than just data—they need context, semantics, and smart framing.

By building structured Workspaces, rich glossaries, business-aware agents, and automated supervision, we made our data more accessible and actionable.

And when a product manager, analyst, or marketer finds a critical insight in minutes—not hours—we know we did something right.