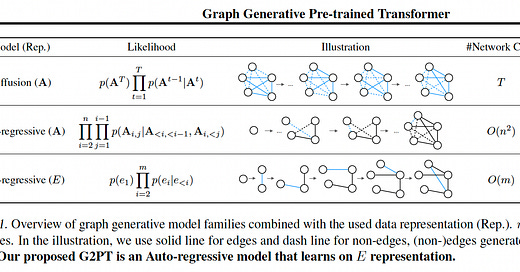

We’re used to seeing language models trained in an unsupervised fashion (typically referred to as pretraining) on text. This paper extends the concept of generative model pretraining to graphs. Essentially, it converts a graph into a kind of text—that is, a one-dimensional sequence of tokens—and trains a transformer on that sequence. However, unlike text, a graph is inherently non-linear, and representing it as a sequence is not entirely trivial.

The approach chosen by the authors seems fairly intuitive: the graph is represented as a sequence of nodes and edges. Each node is represented as a pair consisting of its category (discrete) and its index. Each edge is represented as a triplet that includes the two nodes it connects and the edge type. The order of nodes can be arbitrary (i.e., invariant to permutation), but the node order is selected using a simple algorithm: first, pick the node with the lowest degree, and from its edges, choose the one that leads to the node with the smallest degree among its neighbors. Then remove this edge and repeat the process until all edges have been removed.

Once the graph is written as a sequence of nodes and edges (with a special token separating them), positional encoding (PE) is applied. The paper uses absolute positional encoding, where each node and edge is encoded based on its position in the sequence (the paper doesn't elaborate on the exact form of PE used). Then, training proceeds similarly to a language model—that is, autoregressive prediction: predicting the next token (node or edge) given the past tokens. In short, standard generative model training.

After pretraining, the paper proposes a rejection sampling-based fine-tuning approach. Suppose we want to generate graphs that meet a specific condition, and we had some such graphs during pretraining. We begin generating graphs and collect a dataset of those that satisfy the condition. Once enough are gathered, we fine-tune the model. This process—generation, filtering, and fine-tuning—is repeated.

The authors also propose a method for training with Proximal Policy Optimization (PPO) on graphs for a given reward function. The paper suggests combining the PPO loss, the critic loss (an estimate of the value function), and the original pretraining loss described earlier.

The paper is quite interesting, but one thing that concerns me about this approach is the invariance of this representation to permutations of the nodes. I believe this requires extremely intensive training over a massive number of node permutations, especially for large graphs. Otherwise, the node representations will be sensitive to order and not particularly effective.