DL Paper Review, Time-MoE: Billion-Scale Time Series Foundation Models with Mixture of Experts

Mike's Daily Article - 21.01.25

This paper caught my attention despite my shallow knowledge of time-series (TS) analysis, mainly because its title includes the phrase "Foundation Models," which is quite a rare beast in the time-series field, unlike in the world of large language models. The reason for this is (probably) that time series are far more varied, and differ from one another much more, than natural-language texts do.

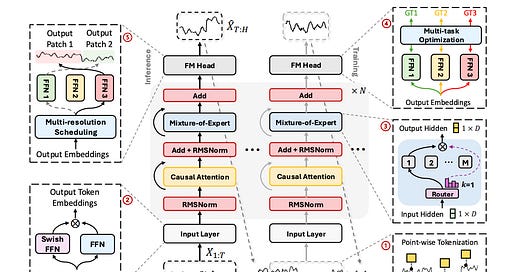

To be honest, I didn't find any very interesting architectural innovations in Time-MoE, which is of course based on transformers. However, it does have some elements that differ from what we're used to seeing in LLMs. For instance, instead of the tokenization and embedding layers based on a token dictionary that LLMs have, in the proposed model each token (which is a single point in the series) undergoes a non-linear transformation with a SwiGLU activation and several linear transformations.
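To make the point-wise embedding more concrete, here is a minimal sketch in plain Python. It assumes the simplest SwiGLU form, with two bias-free linear projections where one passes through Swish and gates the other. The names `embed_point`, `w`, `v`, the hidden size, and the random weights are all my own illustrative choices, not taken from the paper's code.

```python
import math
import random


def swish(z: float) -> float:
    """Swish / SiLU activation: z * sigmoid(z)."""
    return z / (1.0 + math.exp(-z))


def embed_point(x: float, w: list[float], v: list[float]) -> list[float]:
    """Map one scalar time point to a D-dimensional vector with a SwiGLU gate.

    Output dimension d is swish(w[d] * x) * (v[d] * x): one linear
    projection goes through Swish and gates a second linear projection.
    """
    return [swish(wd * x) * (vd * x) for wd, vd in zip(w, v)]


# Hypothetical hidden size and random weights, for illustration only.
random.seed(0)
hidden_dim = 8
w = [random.gauss(0.0, 1.0) for _ in range(hidden_dim)]
v = [random.gauss(0.0, 1.0) for _ in range(hidden_dim)]

# Three consecutive observations; each one becomes its own "token".
series = [0.5, -1.2, 3.0]
tokens = [embed_point(x, w, v) for x in series]
```

The thing to notice is that there is no vocabulary lookup anywhere: every real-valued observation is mapped directly to a vector, so the "dictionary" is effectively continuous.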

Regarding the transformer layer, the authors use a fairly standard MoE architecture. The only difference that caught my eye was the use of RMSNorm, a normalization method I wasn't familiar with. Besides that, it has all the regular transformer components including, of course, residual connections.
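For anyone else meeting RMSNorm for the first time, here is a small sketch of what it computes. Unlike LayerNorm, it does not subtract the mean: it only rescales the vector by its root mean square and applies a learned per-feature gain. The function name, the `eps` value, and the example vector are my own assumptions for illustration.

```python
import math


def rms_norm(x: list[float], gain: list[float], eps: float = 1e-6) -> list[float]:
    """Divide x by its root mean square, then apply a per-feature gain."""
    rms = math.sqrt(sum(xi * xi for xi in x) / len(x) + eps)
    return [g * xi / rms for xi, g in zip(x, gain)]


hidden = [3.0, -4.0, 0.0, 5.0]
# A gain of all ones stands in for the learned scale parameter.
normed = rms_norm(hidden, gain=[1.0] * len(hidden))

# After normalization the mean of the squared entries is (almost) 1.
mean_square = sum(v * v for v in normed) / len(normed)
```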

The final layer of Time-MoE is a bit different from what we're used to seeing in transformers. Unlike language models, in the TS world we need to predict at different time horizons (say a second, a minute, or a day ahead), so the authors use multiple heads in the last layer. Each head is responsible for predicting at a specific horizon (number of samples ahead). During training, they combine the losses from all heads.
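A rough sketch of the multi-head idea described above, in plain Python. I'm assuming that each head is an independent linear projection of the final hidden state, that the head for horizon `p` outputs the next `p` points, and that the per-head losses are simply averaged. The horizons, sizes, weights, and the MSE stand-in loss are hypothetical choices for illustration, not the paper's settings.

```python
import random


def linear_head(hidden: list[float], weights: list[list[float]]) -> list[float]:
    """Project one hidden vector to a forecast; one weight row per future step."""
    return [sum(w * h for w, h in zip(row, hidden)) for row in weights]


def mse(pred: list[float], target: list[float]) -> float:
    """Mean squared error, used here as a stand-in for the per-head loss."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


random.seed(0)
hidden_dim = 4
horizons = [1, 2, 4]  # hypothetical horizons, chosen only for illustration

# One independent projection per horizon; head p outputs the next p points.
heads = {
    p: [[random.gauss(0.0, 0.1) for _ in range(hidden_dim)] for _ in range(p)]
    for p in horizons
}

hidden = [0.2, -0.1, 0.4, 0.3]  # final-layer state at the current time step
future = [1.0, 1.1, 0.9, 1.2]   # ground-truth continuation of the series

forecasts = {p: linear_head(hidden, heads[p]) for p in horizons}
per_head_loss = {p: mse(forecasts[p], future[:p]) for p in horizons}

# Combine the heads by averaging (an assumption about the exact weighting).
total_loss = sum(per_head_loss.values()) / len(horizons)
```

All heads read the same hidden state, so the backbone is trained once while learning to serve several forecast lengths at the same time.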

The loss functions in the paper are quite standard too: the Huber loss, a robust version of L2 that is quadratic for small errors but grows only linearly for large ones, so outliers can't blow the loss up. Additionally, there's a regularization term that encourages all experts in the MoE to be activated uniformly. And of course, they trained the model on huge and diverse datasets.
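Both loss terms are easy to sketch in a few lines of plain Python. The Huber part is the textbook definition. For the balancing part I'm assuming a Switch-Transformer-style penalty with top-1 routing (fraction of tokens sent to each expert times the average router probability for that expert), which may differ in detail from what the paper uses. The function names and the toy router outputs are my own.

```python
def huber(error: float, delta: float = 1.0) -> float:
    """Huber loss: quadratic for |error| <= delta, linear beyond it."""
    abs_err = abs(error)
    if abs_err <= delta:
        return 0.5 * error * error
    return delta * (abs_err - 0.5 * delta)


def load_balance_penalty(router_probs: list[list[float]]) -> float:
    """Switch-style balancing penalty, assuming top-1 routing.

    router_probs[t][i] is the router probability of expert i for token t.
    """
    num_tokens = len(router_probs)
    num_experts = len(router_probs[0])
    # f[i]: fraction of tokens whose top choice is expert i.
    f = [0.0] * num_experts
    for probs in router_probs:
        f[probs.index(max(probs))] += 1.0 / num_tokens
    # r[i]: average router probability assigned to expert i.
    r = [sum(p[i] for p in router_probs) / num_tokens for i in range(num_experts)]
    return num_experts * sum(fi * ri for fi, ri in zip(f, r))


# Small versus large forecasting errors under the Huber loss.
small = huber(0.5)
large = huber(10.0)

# Toy router outputs for two tokens and two experts.
balanced = load_balance_penalty([[0.6, 0.4], [0.4, 0.6]])
collapsed = load_balance_penalty([[0.9, 0.1], [0.8, 0.2]])
```

With `delta = 1.0`, an error of 10 costs far less under Huber than the 50 that half the squared error would give, and the router that sends every token to the same expert gets a higher penalty than the one that splits tokens evenly.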

That's it - a short review, and hopefully a clear one too…

https://arxiv.org/pdf/2409.16040