Beyond the Majority Vote: Confidence-Aware Generation

DEEP THINK WITH CONFIDENCE; Mike’s Daily Paper: 29.08.25

Deep Learning Paper Review No. 497 - 3 more reviews to go for the 500th, and today a short review of a paper with a flashy name (which indeed enjoyed significant hype) with a rather intuitive idea that made me wonder how no one has done this before (if that's true).

The paper proposes an entropy-based method for sampling from autoregressive language models (although the proposed approach can be easily extended to models that generate output non-autoregressively, like diffusion-based language models). As you probably know, entropy is a measure of uncertainty and can be used in language models to estimate the model's "degree of confidence" in the output it generates.

Autoregressive language models generate each token based on the distribution of that token given its preceding context. The higher the entropy of the predicted token, which is equal to the negative log of its probability, the higher its uncertainty. That is, as the probability of the token decreases, the uncertainty associated with its selection increases. As mentioned, the authors propose a sampling method based on the average entropy of the tokens in the generated text.

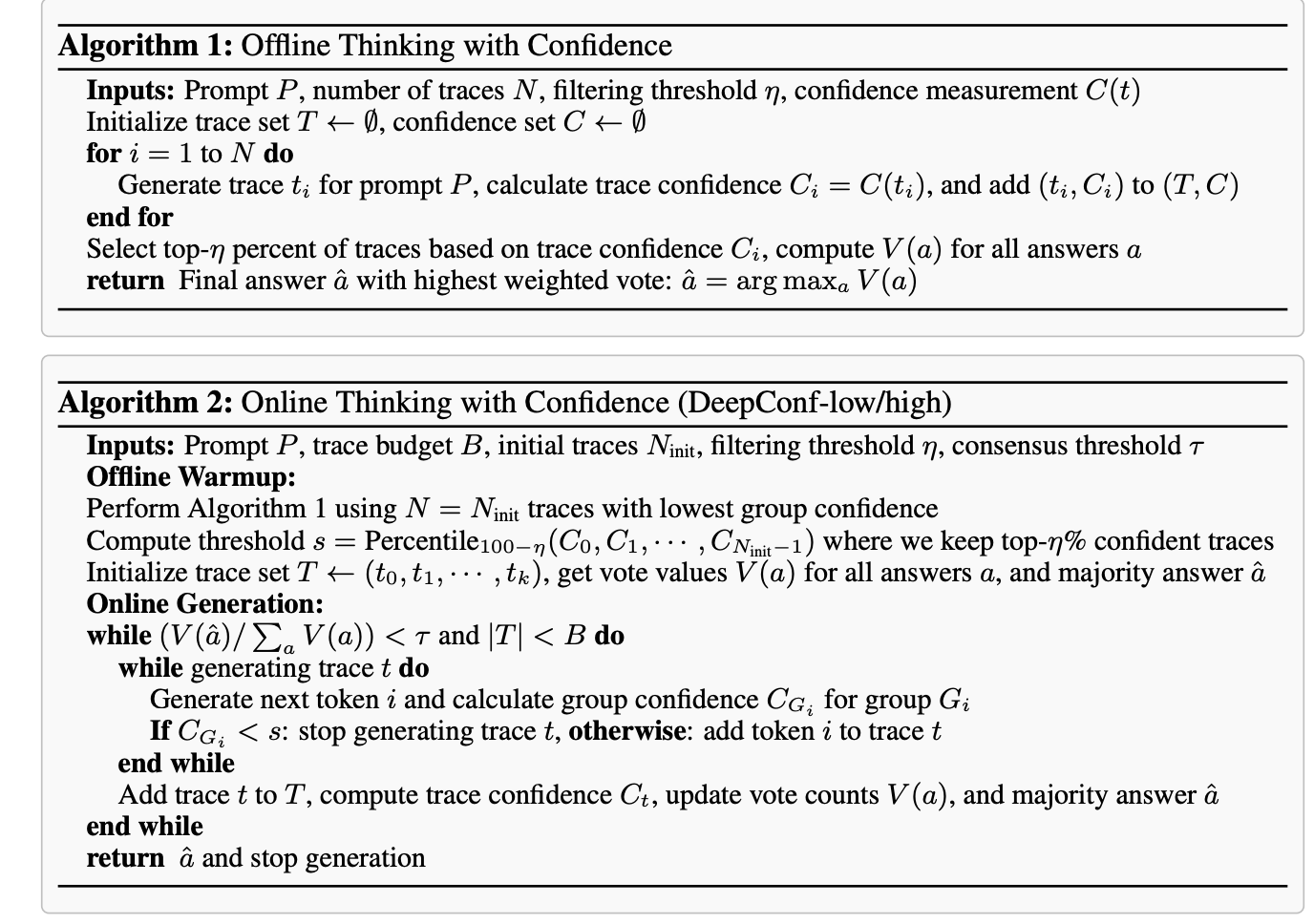

In particular, in cases where the model generates several answers to a mathematical question, we then choose the correct answer not with a simple majority vote (i.e., the final answer that most of the answers converged to). Still, by weighting each answer with its certainty, that is, with the average entropy of all its tokens. This way, answers that the model is very unsure about are filtered out.

The authors also suggest setting a threshold for the maximum uncertainty of the model's answer. If the current average uncertainty of the answer (recalculated for each generated token) exceeds the threshold, the answer is discarded, and the model stops generating it. The threshold is set as a percentile of the uncertainties of the correct answers during a warm-up phase.

Additionally, the authors propose determining the number of answers sampled from the model based on the difficulty of the question. The less "agreement" there is between the results of the different answers, the more answers the model generates, with the answers having too high uncertainty being filtered out, as mentioned.

A nice paper, but it leaves a feeling that I've seen something like this before....

Unfortunately i can't point on any concrete paper as Ive read many hundreds (I guess about 2K or so) of deep learning papers during the last 7 years. In my review I wrote that the authors' approach looks familiar but i can't recover the exact paper from my memory

Could you share the paper that you think is similar to the DeepConf paper? I'd really appreciate your help.